Parquet: Crafting Data Bridges for Efficient Computation

Parquet: Crafting Data Bridges for Efficient Computation

These blog posts are intended to provide software tips, concepts, and tools geared towards helping you achieve your goals. Views expressed in the content belong to the content creators and not the organization, its affiliates, or employees. If you have any questions or suggestions for blog posts, please don’t hesitate to reach out!

Introduction

(Image: Vulphere, Wikimedia Commons)

Apache Parquet is a columnar and strongly-typed tabular data storage format built for scalable processing which is widely compatible with many data models, programming languages, and software systems.

Parquet files (typically denoted with a .parquet filename extension) are typically compressed within the format itself and are often used in embedded or cloud-based high-performance scenarios.

It has grown in popularity since it was introduced in 2013 and is used as a core data storage technology in many organizations.

This article will introduce the Parquet format from a research data engineering perspective.

Understanding the Parquet file format

(Image: Robert Fischbacher163, Wikimedia Commons)

Parquet began around 2013 as work by Twitter and Cloudera collaborators to help solve large data challenges (for example, in Apache Hadoop systems). It was partially inspired by a Google Research publication: “Dremel: Interactive Analysis of Web-Scale Datasets”. Parquet joined the Apache Software Foundation in 2015 as a Top-Level Project (TLP) (link) The format is similar and has related goals to that of the ORC, Avro, and Feather file formats.

One definition for the word “parquet” is: “A wooden floor made of parquetry.” (Wiktionary: Parquet). Parquetry are often used to form decorative geometric patterns in flooring. It seems fitting to name the format this way due to how columns and values are structured (see more below), akin to constructing a beautiful ‘floor’ for your data efforts.

We cover a few pragmatic aspects of the Parquet file format below.

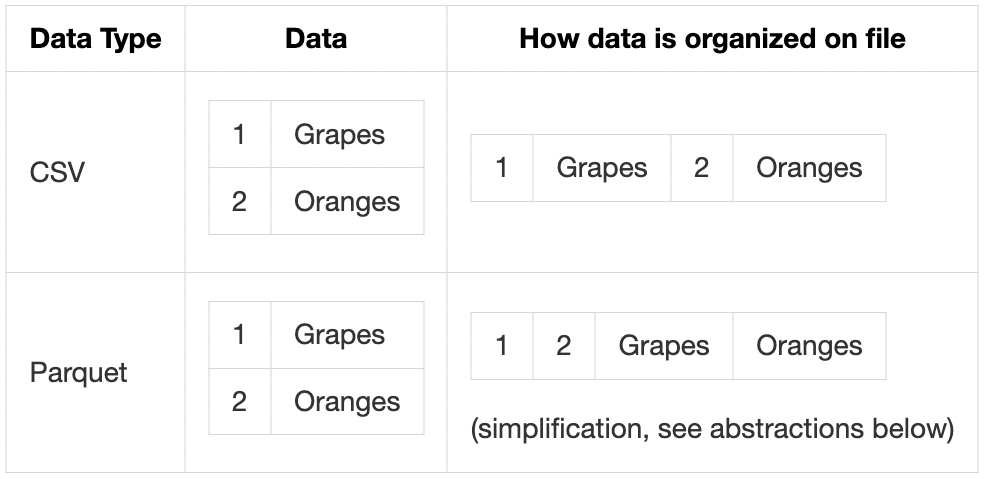

Columnar data storage

Parquet files store data in a “columnar” way which is distinct from other formats. We can understand this columnar format by using plaintext comma-separated value (CSV) format as a reference point. CSV files store data in a row-orientated way by using new lines to represent rows of values. Reading all values of a single column in CSV often involves seeking through multiple other portions of the data by default.

Parquet files are binary in nature, optimizing storage by arranging values from individual columns in close proximity to each other. This enables the data to be stored and retrieved more efficiently than possible with CSV files. For example, Parquet files allow you to query individual columns without needing to traverse non-necessary column value data.

Parquet format abstractions

Row groups, column chunks, and pages

Parquet organizes data using row groups, columns, and pages.

Parquet files organize column data inside of row groups. Each row group includes chunks of columns in the form of pages. Row groups and column pages are configurable and may change depending on the configuration of your Parquet client. Note: you don’t need to be an expert on these details to leverage and benefit from Parquet as these are often configured for default general purposes.

Page encodings

Pages within column chunks may have a number of different encodings.

Parquet encodings are often selected based on the type of data included within columns and the operational or performance needs associated with a project.

By default, Plain (PLAIN) encoding is used which means all values are stored back to back.

Another encoding type, Run Length Encoding (RLE), is often used to efficiently store columns with many consecutively repeated values.

Column encodings are sometimes set for each individual column, usually in an automatic way based on the data involved.

Compression

import os

import pyarrow as pa

from pyarrow import parquet

# create a pyarrow table

table = pa.Table.from_pydict(

{

"A": [1, 2, 3, 4, 5],

"B": ["foo", "bar", "baz", "qux", "quux"],

"C": [0.1, 0.2, 0.3, 0.4, 0.5],

}

)

# Write Parquet file with Snappy compression

parquet.write_table(table=table, where="example.snappy.parquet", compression="SNAPPY")

# Write Parquet file with Zstd compression

parquet.write_table(table=table, where="example.zstd.parquet", compression="ZSTD")

Parquet files can be compressed as they’re written using parameters.

Parquet files may leverage compression to help reduce file size and increase data read performance.

Compression is applied at the page level, combining benefits from various encodings.

Data stored through Parquet is usually compressed when it is written, denoting the compression type through the filename (for example: filename.snappy.parquet).

Snappy is often used as a common compression algorithm for Parquet data.

Brotli, Gzip, ZSTD, LZ4 are also sometimes used.

It’s worth exploring what compression works best for the data and systems you use (for example, ZSTD compression may hold benefits).

“Strongly-typed” data

import pyarrow as pa

from pyarrow import parquet

# create a pyarrow table

table = pa.Table.from_pydict(

{

"A": [1, 2, 3],

"B": ["foo", "bar", 1],

"C": [0.1, 0.2, 0.3],

}

)

# write the pyarrow table to a parquet file

parquet.write_table(table=table, where="example.parquet")

# raises exception:

# ArrowTypeError: Expected bytes, got a 'int' object (for column B)

# Note: while this is an Arrow in-memory data exception, it also

# prevents us from attempting to perform incompatible operations

# within the Parquet file.

Data value must be all of the same type within a Parquet column.

Data within Parquet is “strongly-typed”; specific data types (such as integer, string, etc.) are associated with each column, and thus value. Attempting to store a data value type which does not match the column data type will usually result in an error. This can lead to performance and compression benefits due to how quickly Parquet readers can determine the data type. Strongly-typed data also embeds a kind of validation directly inside your work (data errors “shift left” and are often discovered earlier). See here for more on data quality validation topics we’ve written about.

Complex data handling

import pyarrow as pa

from pyarrow import parquet

# create a pyarrow table with complex data types

table = pa.Table.from_pydict(

{

"A": [{"key1": "val1"}, {"key2": "val2"}],

"B": [[1, 2], [3, 4]],

"C": [

bytearray("😊".encode("utf-8")),

bytearray("🌻".encode("utf-8")),

],

}

)

# write the pyarrow table to a parquet file

parquet.write_table(table=table, where="example.parquet")

# read the schema of the parquet file

print(parquet.read_schema(where="example.parquet"))

# prints:

# A: struct<key1: string, key2: string>

# child 0, key1: string

# child 1, key2: string

# B: list<element: int64>

# child 0, element: int64

# C: binary

Parquet file columns may contain complex data types such as nested types (lists, dictionaries) and byte arrays.

Parquet files may store many data types that are complicated or impossible to store in other formats.

For example, images may be stored using the byte array storage type.

Nested data may be stored using LIST or MAP logical types.

Dates or times may be stored using various temporal data types.

Oftentimes, complex data conversion within Parquet files is already implemented (for example, in PyArrow).

Metadata

import pyarrow as pa

from pyarrow import parquet

# create a pyarrow table

table = pa.Table.from_pydict(

{

"A": [1, 2, 3],

"B": ["foo", "bar", "baz"],

"C": [0.1, 0.2, 0.3],

}

)

# add custom metadata to table

table = table.replace_schema_metadata(metadata={"data-producer": "CU DBMI SET Blog"})

# write the pyarrow table to a parquet file

parquet.write_table(table=table, where="example.snappy.parquet", compression="SNAPPY")

# read the schema

print(parquet.read_schema(where="example.snappy.parquet"))

# prints

# A: int64

# B: string

# C: double

# -- schema metadata --

# data-producer: 'CU DBMI SET Blog'

Metadata are treated as a distinct and customizable components of Parquet files.

The Parquet format treats data about the data (metadata) separately from that of column value data. Parquet metadata includes column names, data types, compression, various statistics about the file, and custom fields (in key-value form). This metadata may be read without reading column value data which can assist with data exploration tasks (especially if the data are large).

Multi-file “datasets”

import pathlib

import pyarrow as pa

from pyarrow import parquet

pathlib.Path("./dataset").mkdir(exist_ok=True)

# create pyarrow tables

table_1 = pa.Table.from_pydict({"A": [1]})

table_2 = pa.Table.from_pydict({"A": [2, 3]})

# write the pyarrow table to parquet files

parquet.write_table(table=table_1, where="./dataset/example_1.parquet")

parquet.write_table(table=table_2, where="./dataset/example_2.parquet")

# read the parquet dataset

print(parquet.ParquetDataset("./dataset").read())

# prints (note that, for ex., [1] is a row group of column A)

# pyarrow.Table

# A: int64

# ----

# A: [[1],[2,3]]

Parquet datasets may be composed of one or many individual Parquet files.

Parquet files may be used individually or treated as a “dataset” through file groups which include the same schema (column names and types). This means you can store “chunks” of Parquet-based data in one or many files and provides opportunities for intermixing or extending data. When reading Parquet data this way libraries usually use the directory as a way to parse all files as a single dataset. Multi-file datasets mean you gain the ability to store arbitrarily large amounts of data by sidestepping, for example, inode limitations.

Apache Arrow memory format integration

import pathlib

import pyarrow as pa

from pyarrow import parquet

# create a pyarrow table

table = pa.Table.from_pydict(

{

"A": [1, 2, 3],

"B": ["foo", "bar", "baz"],

"C": [0.1, 0.2, 0.3],

}

)

# write the pyarrow table to a parquet file

parquet.write_table(table=table, where="example.parquet")

# show schema of table and parquet file

print(table.schema.types)

print(parquet.read_schema("example.parquet").types)

# prints

# [DataType(int64), DataType(string), DataType(double)]

# [DataType(int64), DataType(string), DataType(double)]

Parquet file and Arrow data types are well-aligned.

The Parquet format has robust support and integration with the Apache Arrow memory format. This enables consistency across Parquet integration and how the data are read using various programming languages (the Arrow memory format is relatively uniform across these).

Performance with Parquet

Parquet files often outperforms traditional formats due to how it is designed. Other data file formats may vary in performance contingent on specific configurations and system integration. We urge you to perform your own testing to find out what works best for your circumstances. See below for a list of references which compare Parquet to other formats.

- CSV vs Parquet - Speed up data analytics and wrangling with Parquet files (Posit)

- CSV vs Parquet - Apache Parquet vs. CSV Files (DZone)

- Feather vs Parquet - Feather V2 with Compression Support in Apache Arrow 0.17.0 (Ursa Labs)

- ORC vs Parquet - The impact of columnar file formats on SQL-on-hadoop engine performance: A study on ORC and Parquet (Concurrency and Computation: Practice and Experience)

How can you use Parquet?

The Parquet format is common in many data management platforms and libraries. Below are a list of just a few popular places where you can use Parquet.

-

Python

- Pandas (

pd.DataFrame.to_parquet(),pd.read_parquet()) - Apache Spark (Spark SQL Guide: Parquet Files)

- PyTorch (

ParquetDataFrameLoader) - PyArrow (PyArrow: Reading and Writing the Apache Parquet Format)

- Pandas (

- R

Concluding Thoughts

This article covered the Parquet file format including notable features and usage. Thank you for joining us on this exploration of Parquet. We appreciate your support, hope the content here helps with your data decisions, and look forward to continuing the exploration of data formats in future posts.